The internet as we know it, viewed through a web browser, is only just over twenty-five years old.

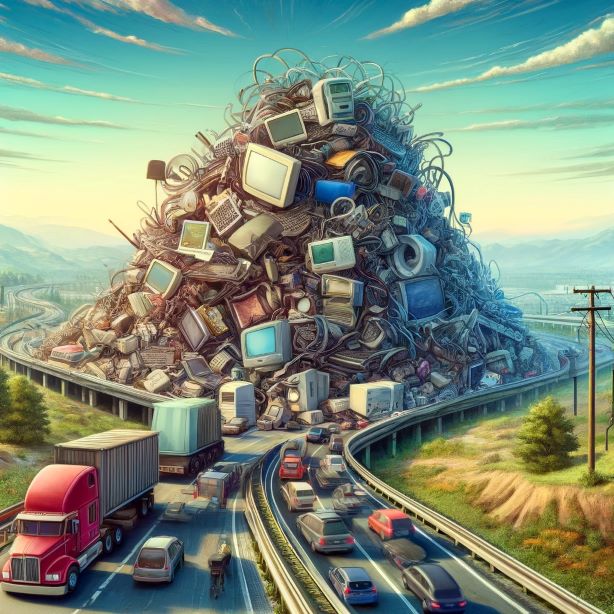

The Netscape browser gave legitimate businesses, entrepreneurs, socialites, not-so-legitimate businesses, and others with purely malicious intent a means to promote their wares. What has evolved on the internet is comparable to a globally pervasive Wild West, where the main thoroughfares remain unpoliced and roughly fifty percent of the traffic can be considered junk.

What about filtering traffic?

Because of data privacy constraints, Tier 1 providers are unable to separate good data from bad, which directly reduces effective bandwidth. From the pimply-faced, bearded hacker hiding under a black hoodie, running continuous scans on the internet and distributing bots, to state-sponsored corporations meddling in democratic processes, these images are both emblem and reality.

How do you distinguish a Black Hat from a White Hat?

How do you separate a malicious scan from a solicited penetration test? You don’t, and you really can’t without an investigation. Ideally, if the backbone could be sanitized, network service providers (NSPs) could keep the fast lanes clear and divert the data harvesters and black hats into the quicksand. We’ve seen this debate before with net neutrality, and we’d be naive to believe ISPs don’t apply some form of traffic filtering, mainly to combat copyright infringement and illegal IPTV, but the legalities are complex.

So what can be done to make the internet more efficient?

Until this is sorted out (oh, Big Brother), we will keep hearing the same words: prioritize, equal, throttle, neutrality, policies, shaping, policing, and so on, while more bandwidth continues to be thrown at the problem.